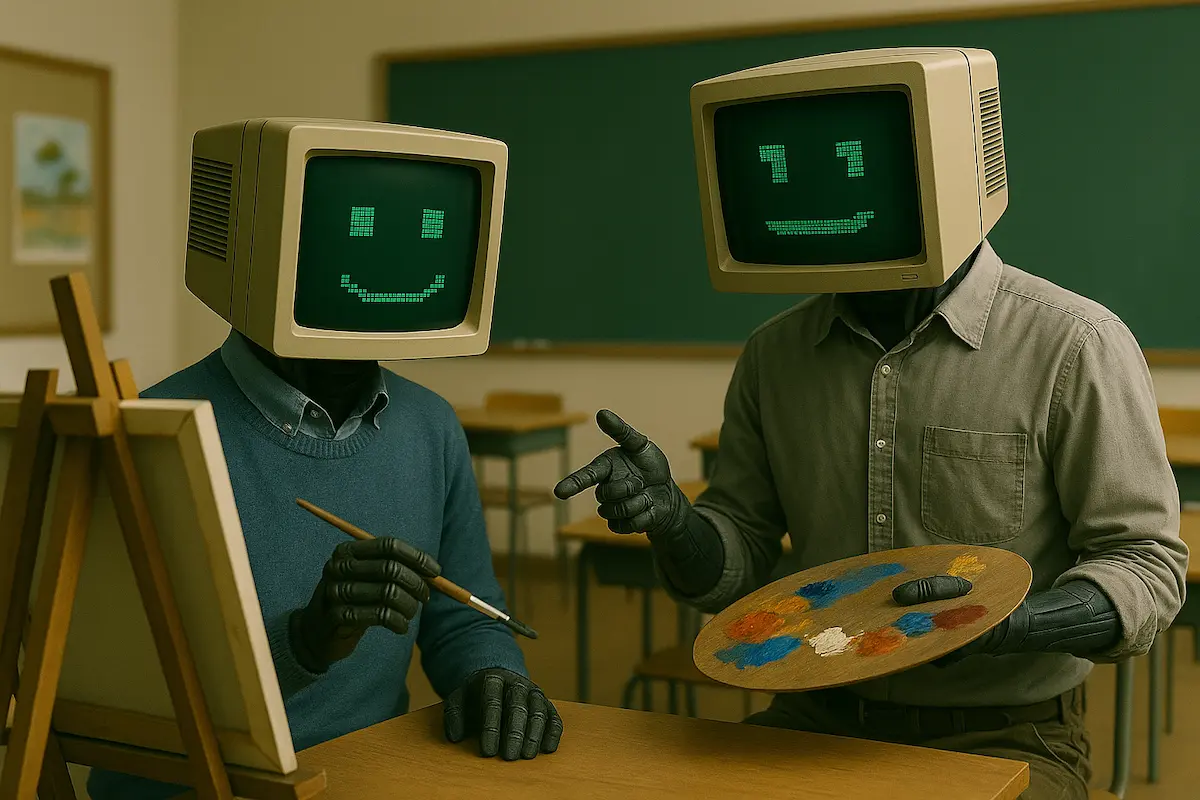

The AI That's Teaching Itself to Draw – And All Your Favorite Games May Depend On It

Discover how self-learning AI art models are shaping the future of game development and digital design. Explore emerging tools like FLUX and RCG, their impact on asset generation, and how they counter the "AI theft" debate.

Artificial intelligence has begun creating art autonomously. Using self-supervised learning, models can now teach themselves to draw, paint, or generate visuals without needing labeled datasets. This shift is a game-changer for digital art and game development. In this article, we explore emerging AI tools and research that enable self-learning art creation, and how they’re starting to influence game design and creative production.

Self-Taught AI Artists (How Machines Learn to Draw)

One pioneering example is a reinforcement learning “neural painter” that learns to paint stroke by stroke, rewarded for how closely its canvas matches a target image. It develops painting skill through trial and error, without any recorded human strokes to imitate.
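To make the reward idea concrete, here is a minimal sketch. It is not the neural painter itself: it swaps the learned reinforcement learning policy for a plain random search, and the grid canvas, stroke format, and function names are all assumptions made for illustration. What it does share with the approach described above is the feedback signal: a stroke is judged only by how much closer it brings the canvas to the target.

```python
import random

def mse(a, b):
    """Mean squared error between two equally sized grayscale grids."""
    n = len(a) * len(a[0])
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n

def apply_stroke(canvas, stroke):
    """Return a copy of the canvas with one horizontal stroke painted on it."""
    row, start, length, value = stroke
    new = [r[:] for r in canvas]
    for col in range(start, min(start + length, len(new[0]))):
        new[row][col] = value
    return new

def paint(target, steps=2000, seed=0):
    """Greedy random search: keep a random stroke only if its reward is positive."""
    rng = random.Random(seed)
    h, w = len(target), len(target[0])
    canvas = [[0.0] * w for _ in range(h)]
    error = mse(canvas, target)
    for _ in range(steps):
        # Hypothetical stroke format: (row, start column, length, brightness).
        stroke = (rng.randrange(h), rng.randrange(w), rng.randint(1, w), rng.random())
        candidate = apply_stroke(canvas, stroke)
        new_error = mse(candidate, target)
        reward = error - new_error  # positive when the canvas got closer to the target
        if reward > 0:
            canvas, error = candidate, new_error
    return canvas, error

# Target: a bright 4x4 square in the middle of a dark 8x8 image.
target = [[1.0 if 2 <= r <= 5 and 2 <= c <= 5 else 0.0 for c in range(8)] for r in range(8)]
canvas, final_error = paint(target)
blank_error = mse([[0.0] * 8 for _ in range(8)], target)
print(f"error before painting: {blank_error:.3f}, after: {final_error:.3f}")
```

A real reinforcement learning agent would learn which strokes tend to earn reward instead of guessing at random, but no human stroke data appears anywhere in the loop in either case.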

Another breakthrough from MIT and Meta AI introduces Representation-Conditioned Generation (RCG). The model first generates a self-supervised visual representation, then uses that representation as the condition for generating the final image. The framework needs no labeled data, and its authors report results on the ImageNet benchmark that rival class-conditional methods (see Citations).
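The two-stage data flow can be sketched in a few lines. This is a toy under loud assumptions, not the RCG implementation: the hand-written `encode` function stands in for a pretrained self-supervised encoder, a per-dimension Gaussian stands in for the representation generator, and a trivial decoder stands in for the pixel generator. All names here are hypothetical. The point is only the order of operations: sample a representation first, then generate pixels conditioned on it, with no labels anywhere.

```python
import random
import statistics

def encode(image):
    """Stand-in for a frozen self-supervised encoder: image -> 2-number representation."""
    flat = [p for row in image for p in row]
    left = [p for row in image for p in row[: len(row) // 2]]
    return (statistics.fmean(flat), statistics.fmean(left))

def fit_representation_sampler(images):
    """Stage 1: model the distribution of representations of unlabeled images."""
    reps = [encode(img) for img in images]
    return [(statistics.fmean(d), statistics.pstdev(d)) for d in zip(*reps)]

def sample_representation(sampler, rng):
    """Draw a new representation from the fitted per-dimension Gaussians."""
    return tuple(rng.gauss(mu, sigma) for mu, sigma in sampler)

def generate_pixels(rep, size, rng):
    """Stage 2: generate an image conditioned on the sampled representation."""
    overall, left = rep
    right = 2 * overall - left  # keeps the overall mean consistent with the left half
    image = []
    for _ in range(size):
        row = []
        for col in range(size):
            base = left if col < size // 2 else right
            row.append(min(1.0, max(0.0, base + rng.gauss(0, 0.05))))
        image.append(row)
    return image

rng = random.Random(0)
# Unlabeled "training set": random 8x8 grayscale images, no class labels.
unlabeled_images = [[[rng.random() for _ in range(8)] for _ in range(8)] for _ in range(20)]

sampler = fit_representation_sampler(unlabeled_images)
rep = sample_representation(sampler, rng)
image = generate_pixels(rep, 8, rng)
```

In this sketch the representation plays the role a class label plays in a class-conditional generator, except that it is produced by the system itself.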

Emerging Tools and Models Gaining Traction

- FLUX.1 by Black Forest Labs is a family of text-to-image models, some released with open weights, offering in-context image editing and high-quality outputs.

- DaVinci repurposes models such as Stable Diffusion and FLUX to create game-ready 2D character art that can be downloaded for use in game engines and VR systems.

- Platforms like Scenario and Leonardo AI enable developers to train models on their own concept art and game assets, ensuring consistency and originality.

- Google’s Unbounded project builds an entire game world in real time, with AI generating both graphics and mechanics within an “infinite sandbox environment.”

Impact on Video Game Development and Design

AI-generated assets are already appearing in game development pipelines. For example, Rec Room’s “Fractura” used ChatGPT for lore and Midjourney and DALL·E for concept art, then converted that art to 3D models using Shap-E. Even the skybox was AI-generated via Blockade Labs (see Citations).

Environment design and asset creation also benefit: NVIDIA’s GauGAN turns rough segmentation sketches into photorealistic landscape images, and Stability AI’s Stable Fast 3D generates a textured 3D mesh from a single image. Both support rapid prototyping.

AI is also extending procedural content generation. Where games like No Man’s Sky build their worlds from hand-designed procedural rules, AI-driven models can generate quests, dialogue, and art tailored to player behavior, offering depth beyond what fixed rules provide.
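The difference between fixed-seed generation and behavior-tailored generation can be shown with a small sketch. It uses weighted templates as a stand-in for a generative model, and every name in it (`QUEST_TEMPLATES`, `generate_quest`, the player stats keys) is hypothetical. The seed keeps the world reproducible, while the player's play history shifts which kind of quest is likely to appear.

```python
import random

# Hypothetical quest templates; a generative model would write this text instead.
QUEST_TEMPLATES = {
    "combat": "Defeat the {enemy} near {place}.",
    "exploration": "Chart the unexplored region beyond {place}.",
    "trading": "Deliver {item} to the merchant at {place}.",
}
ENEMIES = ["sand wyrm", "rogue drone", "ice stalker"]
PLACES = ["the crater lake", "Outpost Nine", "the glass desert"]
ITEMS = ["fuel cells", "rare spores", "star charts"]

def generate_quest(world_seed, player_stats, quest_index=0):
    """Pick a quest deterministically from the seed, biased by player behavior."""
    # Same seed and index always give the same random stream.
    rng = random.Random(f"{world_seed}:{quest_index}")
    kinds = list(QUEST_TEMPLATES)
    # Activities the player does more often get proportionally more weight;
    # the +1 keeps every quest kind possible.
    weights = [player_stats.get(kind, 0) + 1 for kind in kinds]
    kind = rng.choices(kinds, weights=weights, k=1)[0]
    text = QUEST_TEMPLATES[kind].format(
        enemy=rng.choice(ENEMIES), place=rng.choice(PLACES), item=rng.choice(ITEMS)
    )
    return kind, text

# A player who mostly explores will mostly, but not only, see exploration quests.
explorer = {"combat": 2, "exploration": 40, "trading": 5}
for i in range(3):
    print(generate_quest(world_seed=1234, player_stats=explorer, quest_index=i))
```

Swapping the template lookup for a call to a text or image model would keep the same structure: a reproducible seed plus a summary of player behavior as the conditioning input.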

Addressing the “AI Art is Theft” Argument

A common ethical concern is that AI art copies existing artists’ work. Models trained on scraped image datasets do risk reproducing that work, whereas self-supervised models (like RCG) are designed to generate visuals from abstract learned patterns rather than by copying specific artworks.

Tools like Scenario and Leonardo AI allow creators to train models using only their own assets, which keeps ownership and originality of the derived works with the creator. This approach supports creators’ IP rights and positions AI as a creative ally, not a plagiarist.

Citations

- MIT & Meta AI, Representation-Conditioned Generation: https://openreview.net/forum?id=CGcA4F5nlz

- FLUX.1 on Black Forest Labs: https://bfl.ai/flux-1-tools

- DaVinci Game Character AI: https://github.com/ai-da-vinci

- Scenario AI Platform: https://www.scenario.com

- Leonardo AI: https://leonardo.ai

- Google’s Unbounded: https://shorturl.at/xSG7q

- Rec Room Fractura: https://recroom.com/blog/fractura

- Blockade Labs Skybox: https://skybox.blockadelabs.com

- NVIDIA GauGAN: https://www.nvidia.com/en-us/research/ai-playground/gaugan/

- Stable Fast 3D from Stability AI: https://stability.ai/news/stable-fast-3d-launch